I was asked to provide response rate data to our board earlier this month. During this process, I started to notice some inconsistencies in how the "Response Rate" is calculated under the Surveys section. I think I have identified the cause, and here is my example:

Participants: 1000

# of Survey Emails: 2 (initial and follow up)

Maximum # of Emails: 2000

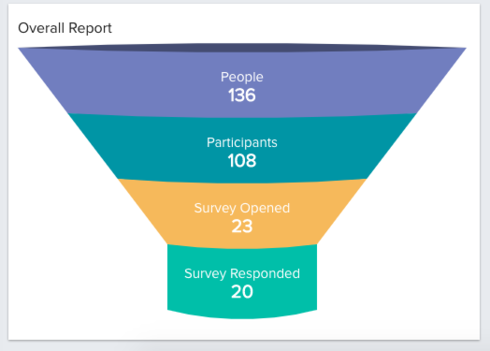

Say that 100 people reply to the survey, and all of them wait to reply until they receive the follow-up email. This would mean we have 2000 emails sent and 100 survey responses. I would expect the response rate for this survey to be 10% (100 submissions divided by 1000 invited participants).

HOWEVER, in a scenario like this I am seeing a response rate of 5% (100 responses divided by 2000 emails sent). In my opinion, the denominator should be the number of participants, not the number of emails.
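To make the arithmetic concrete, here is a rough Python sketch of the two calculations using the numbers from my example. The function and variable names are my own for illustration; this is not Gainsight's actual formula or API, just the two denominators side by side.

```python
def response_rate(responses: int, denominator: int) -> float:
    """Return responses / denominator as a percentage."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return 100.0 * responses / denominator


# Numbers from the example above (hypothetical survey).
participants = 1000
emails_per_participant = 2  # initial email plus one follow-up
responses = 100

emails_sent = participants * emails_per_participant

# What I would expect: responses divided by invited participants.
rate_by_participants = response_rate(responses, participants)

# What I appear to be seeing: responses divided by emails sent.
rate_by_emails = response_rate(responses, emails_sent)

print(f"By participants: {rate_by_participants}%")
print(f"By emails sent:  {rate_by_emails}%")
```

With one follow-up email, the email-based rate comes out at half of the participant-based rate, and the gap would grow with every additional reminder.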

Am I missing something?

Do others see this as well?

Thanks!
