Hey all!

In a topic from December, I alluded to the fact that we’re trying to tackle the increasing spam problem with some out-of-the-box thinking, and promised a write-up once we had things a bit more figured out. While I can’t say we have everything figured out, we’ve got enough to share!

So what’s the problem?

When it comes to spammers and community managers, it’s like a giant lazy game of chess. Your goal is to exert the least amount of effort to beat your opponent. They post obvious junk, we build filters. They inundate us with spam at certain hours, we implement pre-mod processes. They post craftier spam, we implement spam detectors. And so on. Their move, our move.

Their goal is to post as much spam as they can get away with using the least effort possible. The goal for us is to ultimately make the work required to spam not worth the effort.

But more recently, I’ve been noticing a serious uptick in new spam strategies. Spam has definitely started moving on from the classic “This definitely does not belong in this community” to seemingly normal-looking posts - often crafted with AI - with sneaky malicious links or spamdexing hidden amongst the text. Built-in spam detectors have a hard time distinguishing between normal content and spam, often leading to false positives or just totally missing the spam altogether. Like this…

Allowing spam of any sort in our community does not align with our desired customer experience. And with the rise of penalties and potential legal consequences for spam and malicious links, finding a solution to this issue was important for us - and communities everywhere.

What are we working on?

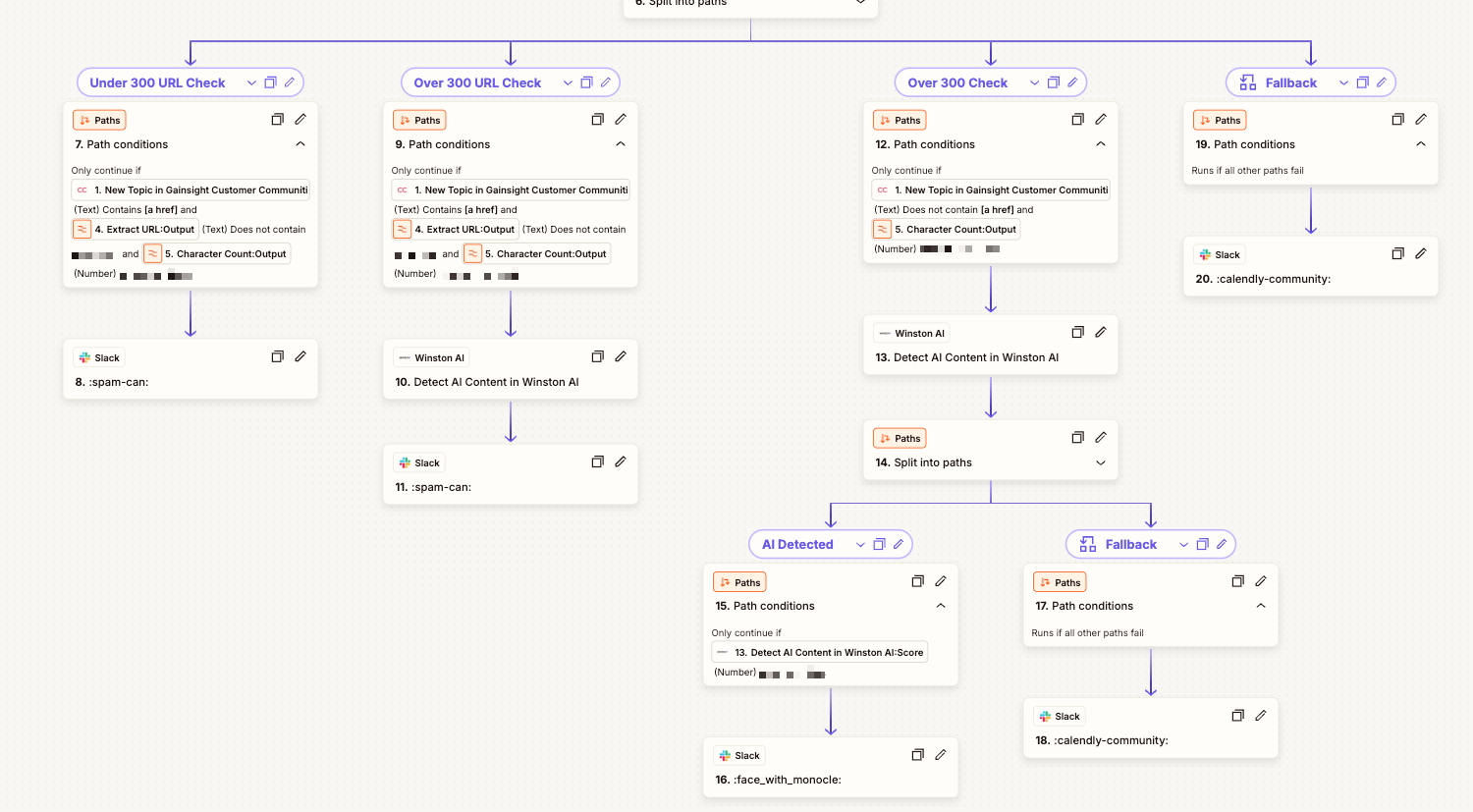

Since we started, we’ve been using Zapier to automate Slack alerts around new content and to detect URLs. It’s helped us respond to authentic posts and remove spam super fast.

But AI-generated content was proving problematic. It often started off with a post that looked kind of helpful but was really similar to a previous reply, which made it fishy and rather unhelpful. Then a few days later, from the same user, came another kind of helpful looking post… but this time with a craftily hidden link that just didn’t align with the post. Sure enough, with a quick check of the user account and URL… spammer! If only we had been able to kick the spammer to the curb with the first post.

Knowing that there’s been an impressive rise of AI detection tools for education, journalism, and related fields, we decided to see if there was anything out there that could adapt to our needs and had a strong Zapier integration.

Turns out a Canadian-based AI detection and linguistic modeling tool called Winston AI was just the ticket. After some initial testing, a chat with the co-founder, and going through procurement on our end, we got the keys to our fancy new tool.

What has been the outcome… so far?

We’re about 6 weeks into our pilot and, as my peer and I were just discussing, we’re starting to see less spam all around. Whatever does come through is either back to the generic stuff our spam detector can catch or is being caught by our dual URL and AI detection Zap. We won’t call it checkmate yet, but having yet another spam fighting tool in our pocket has been helpful.

And no, it’s not perfect. False positives are still very real, but one of the key strategies in our process was to reduce the amount of legitimate content getting caught in spam quarantine. Our URL and AI Zap alerts us (via Slack) to detected content, and that’s all. This ensures that users who are posting legit URLs (outside of our domain) and those who use AI to organize their thoughts or translate still get the best possible customer experience we can provide.
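For anyone who wants to prototype the alert-only idea outside of Zapier, here is a rough Python sketch. It is not the actual Zap: `OWN_DOMAIN`, `AI_THRESHOLD`, `external_urls`, and `review_alert` are hypothetical names, and `ai_likelihood` is assumed to be a number between 0 and 1 that you derive from whatever your detector returns. Flagging on either signal (rather than both) is also an assumption. The key point is that the function only reports reasons for a human to review and never removes anything.

```python
import re
from urllib.parse import urlparse

OWN_DOMAIN = "community.example.com"  # hypothetical: your community's domain
AI_THRESHOLD = 0.8                    # hypothetical cut-off, tune to taste

# Loose URL matcher: stops at whitespace, angle brackets, quotes, and closers.
URL_PATTERN = re.compile(r"https?://[^\s<>\"')\]]+", re.IGNORECASE)


def external_urls(text, own_domain=OWN_DOMAIN):
    """Return URLs in text whose host is not own_domain or a subdomain of it."""
    found = []
    for match in URL_PATTERN.findall(text):
        url = match.rstrip(".,;:!?")  # drop trailing sentence punctuation
        host = (urlparse(url).hostname or "").lower()
        if host != own_domain and not host.endswith("." + own_domain):
            found.append(url)
    return found


def review_alert(text, ai_likelihood):
    """Decide whether to notify moderators. Never blocks or deletes content."""
    urls = external_urls(text)
    reasons = []
    if urls:
        reasons.append("external URL")
    if ai_likelihood >= AI_THRESHOLD:
        reasons.append("likely AI-generated")
    return {"alert": bool(reasons), "reasons": reasons, "urls": urls}
```

The returned dictionary could be formatted into a Slack message, leaving the keep-or-remove decision with a moderator.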

We’re going to keep iterating, testing, and innovating - but we’re excited to have tried something new and we’re even more thrilled to see it having a positive impact.

Pro Tip: Include in your community’s policies and guidelines that AI generated content that is possibly misleading or spam in nature can and will be removed, along with the user potentially banned. This gives you leeway to remove content and users as needed to protect your community.

What could use improvement still?

Some of the areas that we don’t have dialed in are unfortunately outside of our control at the moment, so here are a few that we’d love for Gainsight to consider.

- Zapier Integration - HTML and Plain Text Content

  - Gainsight’s Zapier integration has a field called ‘Content’ (logically… duh) which is great, except it comes through as HTML. AI detection tools don’t do a great job with HTML unfortunately (given the intended use case; education, journalism, etc.) and will often throw a false positive on AI generated content.

  - To sort of get around this, we initially tried using Zapier’s formatter tool to strip the HTML but this ended up removing all the formatting typical of AI generated content (looking at you bulleted lists). We’re now leveraging Zapier’s formatter to convert to Markdown which is better, but introduces some wacky line breaks which we suspect are also impacting AI detection to some extent.

  - Could we consider adding in a ‘plain text’ content field to the Zapier integration?

- Mark users as needing approval to post

  - I’m not saying us community managers have superpowers or anything, but we’re pretty good at knowing when someone is fishy. But we’d like to also give people the benefit of the doubt and not throw a ban out there immediately.

  - Gainsight… can we please have the functionality to mark a user as needing post approval? Better yet, can we assign to a role that requires post approval?
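On the HTML vs. plain text point above, one possible workaround until a plain text field exists is a small code step that converts the HTML yourself while keeping list bullets and paragraph breaks. The sketch below uses only Python's standard `html.parser`; the names `PlainTextExtractor`, `BLOCK_TAGS`, and `html_to_text` are made up for illustration, and whether this output actually scores better in an AI detector than the Markdown conversion is untested.

```python
import re
from html.parser import HTMLParser

# Tags whose closing should end the current line (hypothetical selection).
BLOCK_TAGS = {"p", "div", "ul", "ol", "h1", "h2", "h3", "h4", "blockquote"}


class PlainTextExtractor(HTMLParser):
    """Collects text from HTML, keeping bullets and paragraph breaks."""

    def __init__(self):
        super().__init__(convert_charrefs=True)  # decodes &amp; and friends
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self.parts.append("\n- ")  # keep list items visible as bullets
        elif tag == "br":
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag in BLOCK_TAGS:
            self.parts.append("\n")

    def handle_data(self, data):
        # Collapse runs of whitespace but keep word boundaries intact.
        self.parts.append(re.sub(r"\s+", " ", data))


def html_to_text(html):
    """Return plain text with one line per paragraph or list item."""
    parser = PlainTextExtractor()
    parser.feed(html)
    parser.close()
    lines = [line.strip() for line in "".join(parser.parts).split("\n")]
    return "\n".join(line for line in lines if line)


sample = "<p>Here are <b>two</b> tips:</p><ul><li>First &amp; foremost</li><li>Second</li></ul><p>Done.</p>"
print(html_to_text(sample))
```

Dropping empty lines is a deliberate choice here to avoid the stray line breaks mentioned above; if your detector prefers blank lines between paragraphs, that filter is the line to change.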

Any questions, throw them my way!