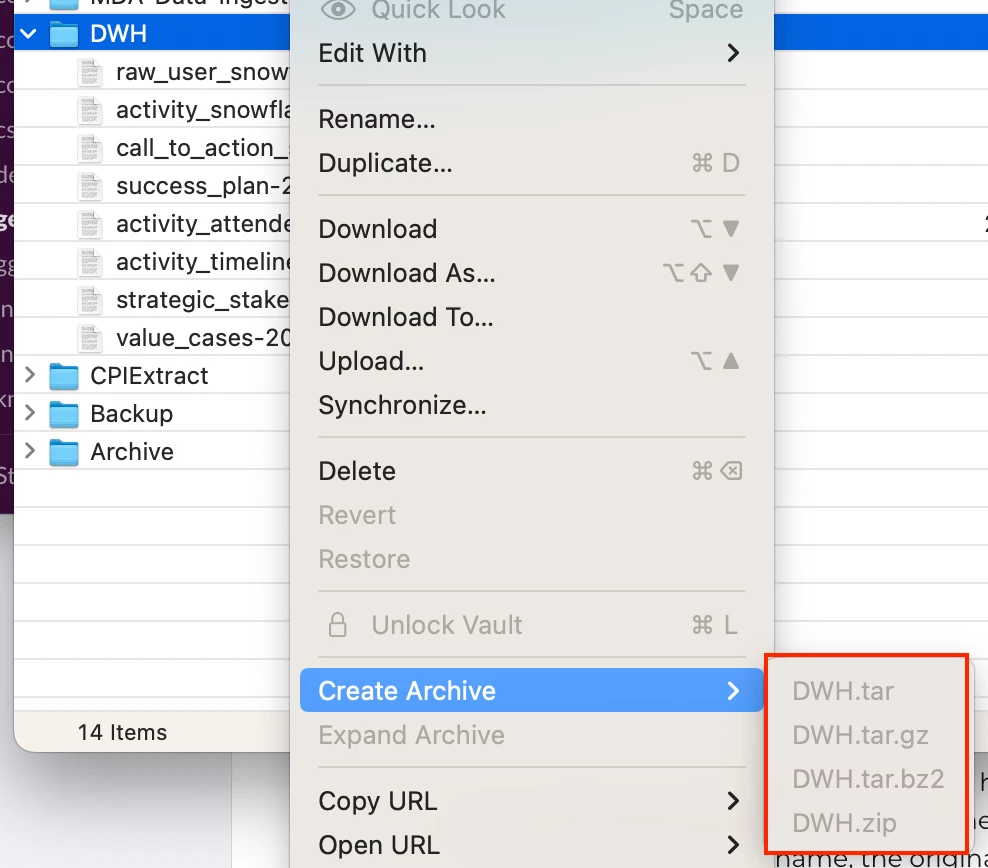

I am setting up a handful of rules to export data daily from Gainsight to S3, where our team will pull the files and ingest them into our DWH. The rules write TSV files to S3. We want to enable archiving for these rules so that when a rule runs today and creates a file with today’s datetime stamp, that file is saved in our DWH folder, and any earlier file from the same rule with the same naming convention (e.g., yesterday’s file) is automatically archived.

For our DWH, we want old files to be archived whenever new files are created, so that we have a better audit trail if a rule fails.
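If the archiving has to be handled on the S3 side, the selection logic described above (keep the newest file per rule, archive the rest) can be sketched as below. This is a minimal sketch, not Gainsight behavior: it assumes a hypothetical file naming convention of `<rule_name>_<YYYYMMDDHHMMSS>.tsv` and a hypothetical `archive/` subfolder, and it only computes which moves to make. The real export file names may differ, so the pattern would need adjusting.

```python
import re
from datetime import datetime

# ASSUMPTION: hypothetical naming convention <rule_name>_<YYYYMMDDHHMMSS>.tsv,
# optionally under a folder prefix such as "dwh/". Real Gainsight names may differ.
KEY_PATTERN = re.compile(r"^(?P<folder>.*/)?(?P<rule>.+)_(?P<stamp>\d{14})\.tsv$")


def plan_archive_moves(keys, archive_prefix="archive/"):
    """Return sorted (source_key, archive_key) pairs for every file that is
    not the newest file produced by its rule in its folder."""
    latest = {}  # (folder, rule) -> (timestamp, key) of the newest file seen
    parsed = []  # (folder, rule, key) for every key that matches the pattern
    for key in keys:
        match = KEY_PATTERN.match(key)
        if match is None:
            continue  # ignore files that don't follow the naming convention
        folder = match.group("folder") or ""
        if folder.endswith(archive_prefix):
            continue  # already archived; leave it alone
        try:
            stamp = datetime.strptime(match.group("stamp"), "%Y%m%d%H%M%S")
        except ValueError:
            continue  # 14 digits that aren't a valid datetime
        group = (folder, match.group("rule"))
        parsed.append((folder, group, key))
        if group not in latest or stamp > latest[group][0]:
            latest[group] = (stamp, key)

    moves = []
    for folder, group, key in parsed:
        if key != latest[group][1]:
            # Move the file into the archive subfolder, keeping its file name.
            filename = key[len(folder):]
            moves.append((key, folder + archive_prefix + filename))
    return sorted(moves)


if __name__ == "__main__":
    listing = [
        "dwh/accounts_20240101093000.tsv",
        "dwh/accounts_20240102093000.tsv",
        "dwh/account_health_20240102093000.tsv",
    ]
    for src, dst in plan_archive_moves(listing):
        print(f"{src} -> {dst}")
```

S3 has no rename operation, so each planned move would typically be applied as a copy followed by a delete (for example boto3's `copy_object` and then `delete_object`), run after the new day's files have landed.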