This article answers frequently asked questions about AI Moderation in Customer Community.

Availability and Setup

On which plan is the Moderation AI Agent available?

The Moderation AI Agent is available for all Customer Community plans at no additional charge.

Why is the Moderation AI Agent free of charge while other AI features, like AI Translations, are a paid add-on?

The Moderation AI Agent is included at no additional cost because Gainsight considers powerful moderation a core trust and safety feature that every community needs.

Can the Moderation AI Agent be turned off or customized?

Yes. The Moderation AI Agent can be turned on or off as needed. It can also be fully customized to align with your community's rules or Code of Conduct.

Does the Moderation AI Agent support multiple languages?

Yes. The Moderation AI Agent is language-agnostic and analyzes content in any language.

User Experience

What does this look like for a community user when they post a topic or reply? Is there a noticeable delay in an acceptable or positive post appearing?

After a user posts, the Moderation AI Agent reviews the post against the provided Code of Conduct and approves it if it complies.

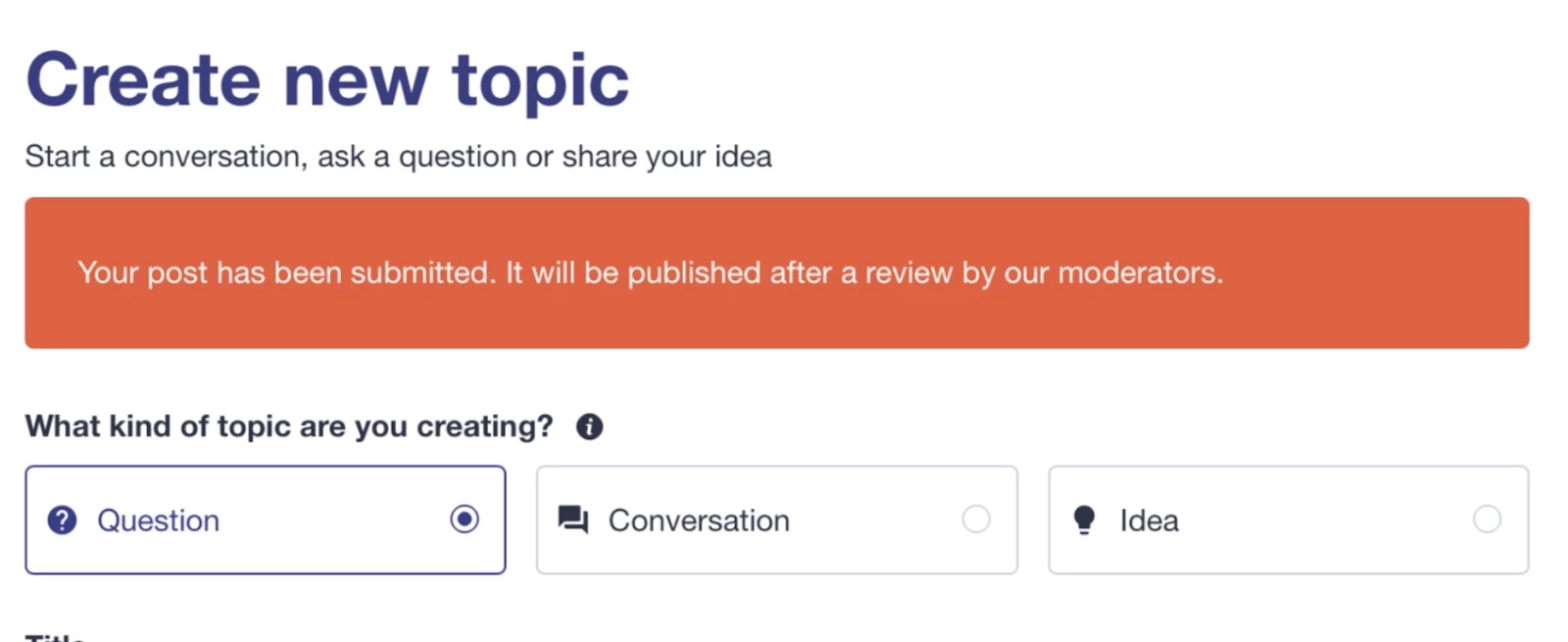

Which topic types are supported?

Questions, Conversations, and Replies to each of these topic types are supported.

Will users be notified if their post is held for moderation?

By default, posts are moderated without user notifications. However, your community team can notify authors via the Notify Authors button in the Control.

How Moderation Works

How does the Moderation AI Agent perform its automations?

Each piece of user-generated content is first run through OpenAI’s Moderation API to meet baseline safety standards. Content that passes is then evaluated by the Moderation AI Agent using GPT-4.1. The agent validates the post against the community’s Code of Conduct and checks for NSFW, PII, and spam violations. The outcome is scored, tagged, and classified without human intervention.
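The two-stage flow described above can be sketched as follows. This is a minimal illustration, not Gainsight's implementation: the `moderate` function, the `ModerationResult` type, and the two stub checks are hypothetical stand-ins for the baseline safety check and the agent's Code of Conduct review. The source does not say what happens to content that fails the baseline check, so the sketch assumes it receives the maximum score.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModerationResult:
    stage: str                      # which stage produced the result
    score: float                    # 0.0 (safe) to 1.0 (clear violation)
    tags: list[str] = field(default_factory=list)


def moderate(
    text: str,
    baseline_flagged: Callable[[str], bool],
    agent_review: Callable[[str], tuple[float, list[str]]],
) -> ModerationResult:
    """Run the baseline check first; only content that passes reaches the agent."""
    if baseline_flagged(text):
        # Assumption: content failing the baseline check gets the maximum score.
        return ModerationResult(stage="baseline", score=1.0, tags=["baseline-safety"])
    score, tags = agent_review(text)
    return ModerationResult(stage="agent", score=score, tags=tags)


# Hypothetical stubs standing in for the real services.
def fake_baseline(text: str) -> bool:
    return "threat" in text.lower()


def fake_agent(text: str) -> tuple[float, list[str]]:
    if "buy now" in text.lower():
        return 0.9, ["spam"]
    return 0.1, []


print(moderate("How do I reset my password?", fake_baseline, fake_agent))
print(moderate("BUY NOW, limited offer", fake_baseline, fake_agent))
```

Passing the two checks in as callables keeps the sketch independent of any particular provider SDK.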

How does the Moderation AI Agent decide when to Approve or Trash posts?

The Moderation AI Agent scores each post and acts on it according to the following score ranges:

- Score range 0.0 – 0.4: Approved automatically.

- Score range 0.4 – 0.7: Pending review by a Community Manager.

- Score range 0.7 – 1.0: Trashed and reported, but available for Community Manager review.
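
The score ranges above can be expressed as a small decision function. This is an illustrative sketch only: the function name `decide` is hypothetical, and because the listed ranges share the endpoints 0.4 and 0.7, the sketch assumes that a boundary score falls into the stricter bucket. The actual boundary behavior is not documented here.

```python
def decide(score: float) -> str:
    """Map a moderation score in [0.0, 1.0] to a decision.

    Assumption: boundary scores (exactly 0.4 or 0.7) go to the stricter bucket.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be between 0.0 and 1.0, got {score}")
    if score < 0.4:
        return "approved"   # approved automatically
    if score < 0.7:
        return "pending"    # held for Community Manager review
    return "trashed"        # trashed and reported, still reviewable


for s in (0.1, 0.4, 0.55, 0.7, 0.95):
    print(s, decide(s))
```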

How are false positives and false negatives handled?

Uncertain cases are marked as Pending for human review. Posts containing PII are automatically redacted and then approved. Moderators can approve and restore trashed items, review moderator tags, and confirm reporting reasons for audit purposes.

What happens to flagged or trashed content?

Trashed topics and replies are reported with a human-readable reason. Pending posts remain visible only to your community team until resolved.

How quickly does the Moderation AI Agent process content?

Typically, within a few seconds. Posts with URLs or images may take longer due to external fetching and analysis.

Will the Moderation AI Agent operate fully autonomously, with no human review?

No. The Moderation AI Agent makes one of three decisions: Approved, Removed (Trashed), or Pending. When the agent is not confident, the post is marked Pending for manual review by your community team.

Roles and Responsibilities

What role do Community Managers play?

Content marked as Pending is held for manual review. The Moderation AI Agent provides concise summaries via moderator tags so Community Managers can make rapid, informed decisions.

Which user is responsible for the actions of the Moderation AI Agent?

Moderation actions are associated with the community's AI-moderator user, a default type of user present in all communities using the Moderation AI Agent.

Performance and Risk

How can moderation performance be tracked?

Moderation actions can be tracked from the Content Moderation widget, available on the homepage. Full details are available in Content Overview as well as the Trash, Reported, and Pending queues.

Is there a risk that individuals could be negatively affected by the AI use case?

The Moderation AI Agent is designed as a net positive for every community. It enhances trust and safety, reduces spam, keeps conversations on topic, and ensures a safe space for all members. It also serves as an effective assistant for Community Managers, Moderators, and Admins.

Would service failure generate significant financial or reputational risk to the business?

If the service fails, standard manual community moderation procedures would resume until normal service is restored.

Data, Privacy, and Compliance

Is user data stored or shared with third parties?

Content is processed transiently and sent to Gainsight’s provider, OpenAI, for analysis. Gainsight enforces a Zero Data Retention policy and does not permit training on any customer data. Personally identifiable information (PII) is redacted where possible before outputs are stored.

Is community data used to train the Moderation AI Agent?

No. Customer data is not used to train the underlying models for Gainsight or its external providers.

Is the Moderation AI Agent used in sensitive contexts such as recruitment, safety, or vulnerable customer identification?

No. The Moderation AI Agent is not a sole decision-maker in high-risk scenarios. Sensitive cases use the Pending flow with human review to ensure accuracy and fairness.

Application Programming Interface (API) Changes

Does the Moderation AI Agent make any changes to the API?

Yes. When the Moderation AI Agent is implemented for the first time, four subscriptions are added to the webhook API: conversation.Replied, conversation.Started, question.Asked, and question.Replied. The API uses these hooks to listen for changes within your site and to moderate content when a user starts or replies to a conversation or question in your community.
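
As a rough illustration of how the four events relate to moderation, the sketch below routes an incoming event to a moderation callback. Only the four event names come from this article. The payload shape (`event` and `content` keys), the `route_event` function, and the callback are assumptions made for the example and do not describe the actual webhook payload.

```python
from typing import Callable, Optional

# The four subscriptions named in this article, mapped to (topic type, kind).
MODERATED_EVENTS = {
    "conversation.Started": ("conversation", "topic"),
    "conversation.Replied": ("conversation", "reply"),
    "question.Asked": ("question", "topic"),
    "question.Replied": ("question", "reply"),
}


def route_event(payload: dict, moderate: Callable[[str], str]) -> Optional[dict]:
    """Moderate the content of a subscribed event; ignore every other event.

    Assumption: the payload is a dict with hypothetical "event" and "content" keys.
    """
    event = payload.get("event")
    if event not in MODERATED_EVENTS:
        return None
    topic_type, kind = MODERATED_EVENTS[event]
    return {
        "topic_type": topic_type,
        "kind": kind,
        "decision": moderate(payload.get("content", "")),
    }


# Example with a placeholder callback that approves everything.
print(route_event({"event": "question.Asked", "content": "How do I log in?"},
                  lambda text: "approved"))
```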