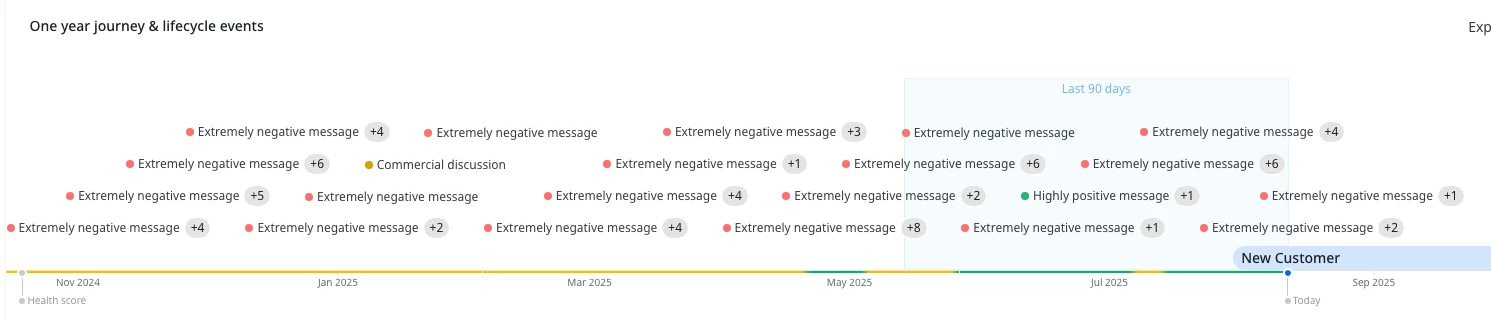

I am finding that the “Extremely negative message” Lifecycle event often fires on messages we would not consider extremely negative. Many of them do have negative sentiment, but they do not belong in the extreme category. What is the best way to calibrate this? I’ve been going through and deleting the ones it has incorrectly classified, but I’m not sure that is helping to train the model. Any tips for improving this?

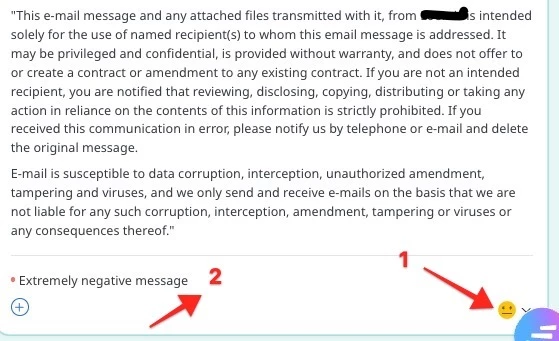

We have some cases where messages in foreign languages are translated via ChatGPT, and it flags almost every one of them as extremely negative, even though most are simply reporting issues or asking for status updates as part of routine business. As you can imagine, a view like this is alarming for our account owners to see, and it does not accurately represent the customer relationship.